Hochschule Rhein-Waal (HSRW; https://www.hochschule-rhein-waal.de/en) is one of the scientific partners in the SPECTORS project and is responsible for linking scientific development and industrial use between partners. Through close collaboration with other partners in the SPECTORS project, HSRW has made progress in several applications.

On-premise cluster environment for remote sensing applications

Recognizing the importance of storing, processing and analyzing large amounts of data in environmental protection, nature conservation, and precision farming, HSRW has been working on a cost- and resource-effective on-premise cluster environment for remote sensing applications with the support of KnowH2O and Wageningen University & Research.

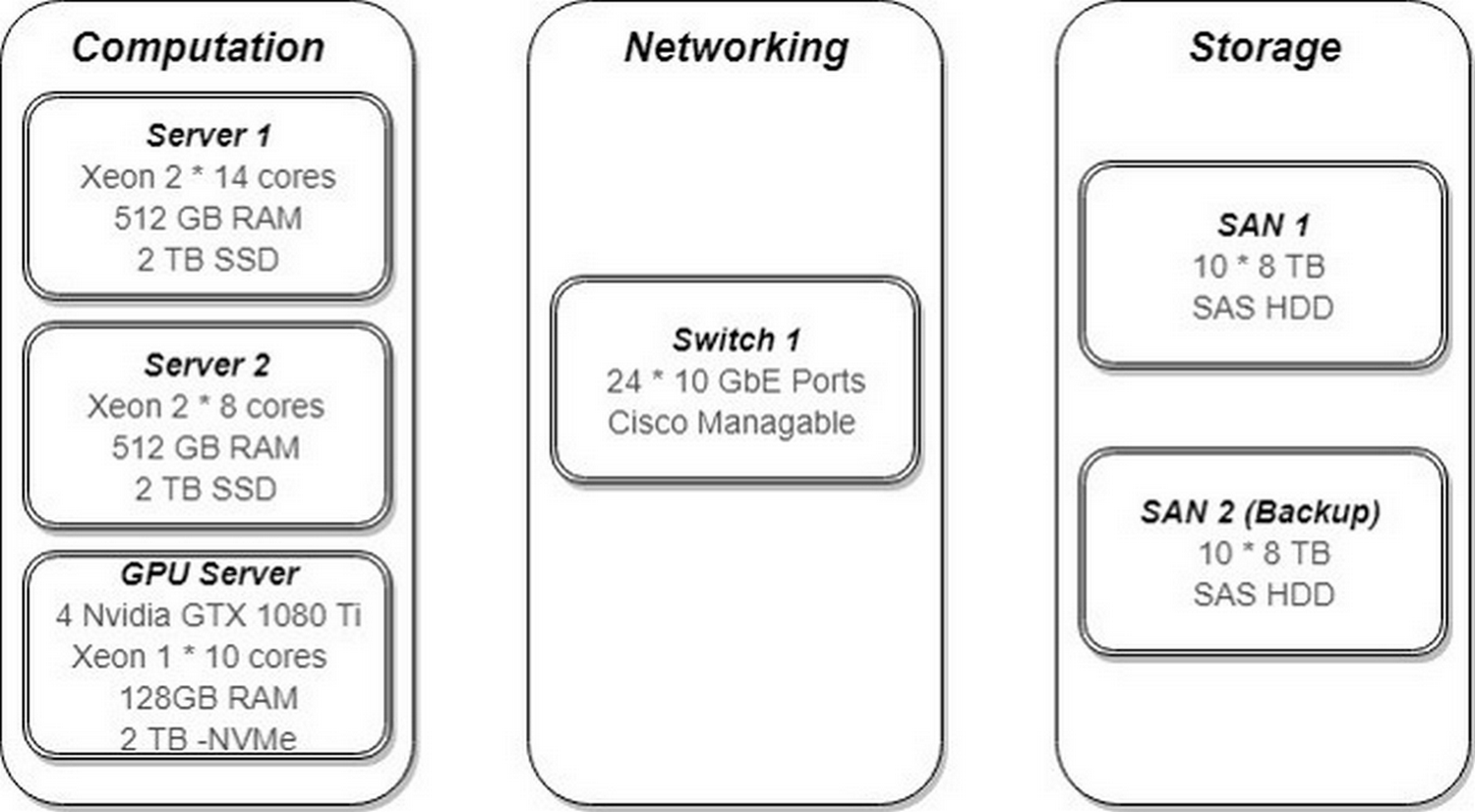

The main hardware resources acquired by HSRW so far include:

- two CPU-based servers, each with 512 GB RAM, a 2 TB SSD, and two Intel Xeon processors (one server with 2 x 14 cores and the other with 2 x 8 cores);

- one GPU-based server with 128 GB RAM, 2 TB NVMe SSD, 1 x 10-core Intel Xeon CPU and 4 Nvidia GTX 1080 Ti GPUs; and

- two 80 TB storage area network (SAN) devices acting as storage and backup.

These nodes are interconnected through a 10 Gbps Ethernet switch.

One important use case for the cluster is running computationally intensive photogrammetry processes. Within the scope of the SPECTORS project, much of the research relied on airborne remote sensing technologies. To obtain useful information from aerial images, one crucial step is to create georeferenced digital elevation models (DEMs) and orthomosaics. Once the models and/or orthomosaics have been created, they can be used in applications such as precision agriculture, nature conservation, and land surveying. Preliminary evaluation showed that the system, when equipped with open-source tools and frameworks, enabled fast and efficient photogrammetry processes. This could enhance the competitiveness of small and medium-sized enterprises (SMEs) that have limited resources.

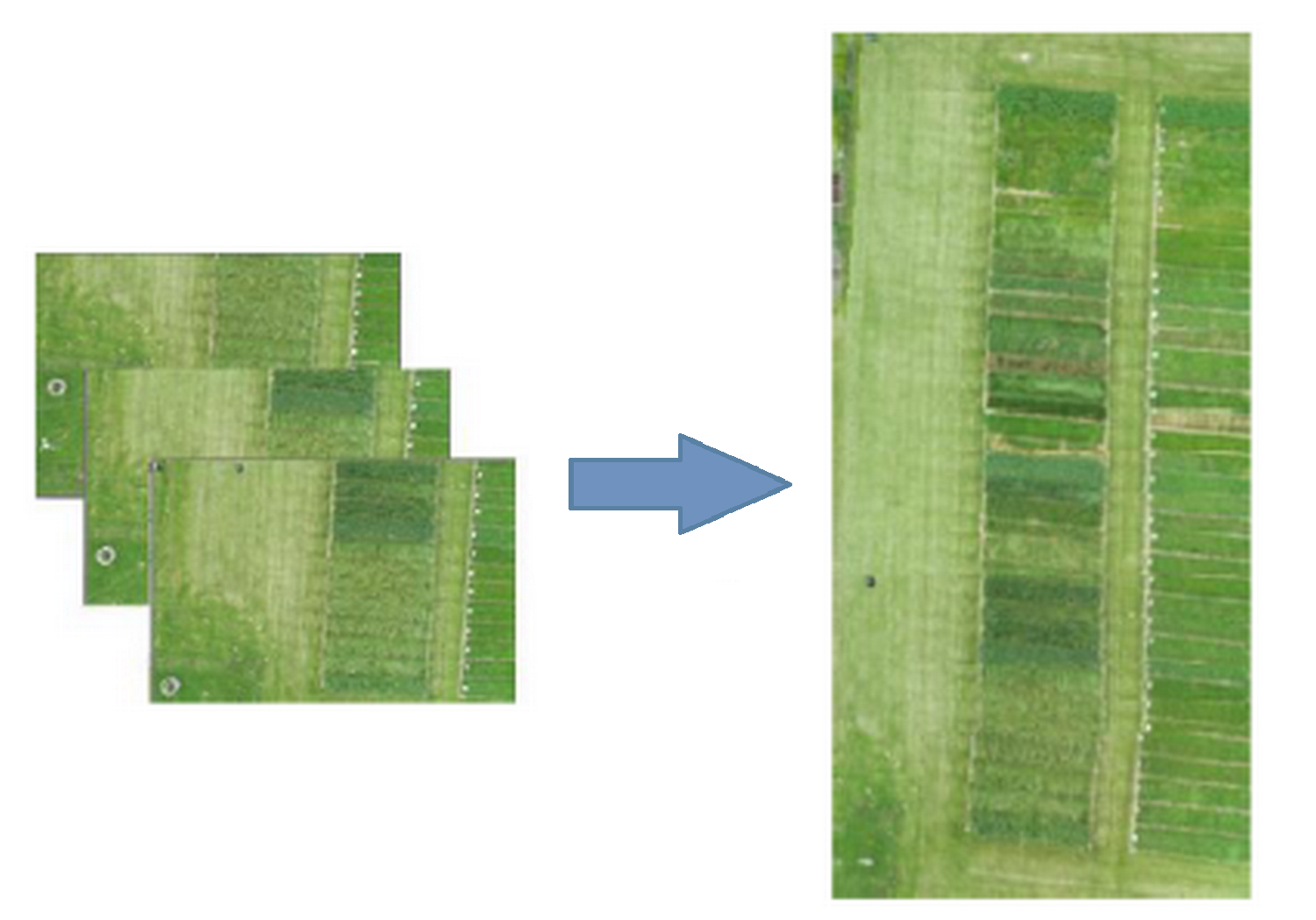

Soft real-time stitching for in-flight reconstruction

Besides the on-premise cluster, which can be used to quickly generate DEMs and orthomosaics for further analysis, HSRW also investigated faster reconstruction of the area of interest in order to assess mission coverage and make decisions in flight. Using the information available from the drone's inertial measurement unit, HSRW worked on an algorithm to reduce the processing time of generating a mosaic. Initial tests showed a promising result: the developed algorithm was able to stitch 39 unmanned aerial vehicle (UAV) images into a mosaic in less than 30 seconds.

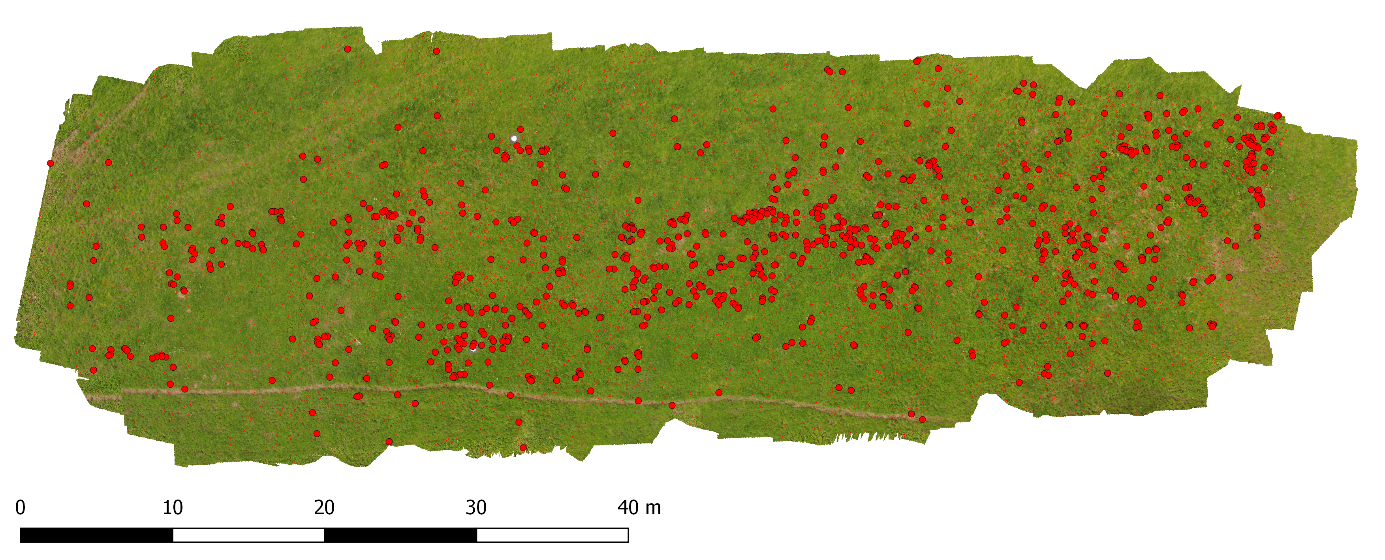

Weed mapping in grassland using UAV imagery

In collaboration with the Naturschutzzentrum im Kreis Kleve e.V., HSRW has developed an open-source workflow to map a selected weed species (Rumex obtusifolius) in grasslands using UAV RGB imagery. Rumex obtusifolius is one of the most common non-cultivated weeds in European grassland. Its broad leaves and widespread occurrence make it competitive with the native pasture species, which leads to reduced grass yield. Moreover, its oxalic acid content makes this species poisonous for livestock if large doses are consumed. Therefore, early removal is preferred, especially on organic dairy farms or in conservation areas where mass spraying is prohibited.

The developed workflow uses a convolutional neural network to detect the locations of the targeted weed species in the generated orthomosaics. Preliminary results were promising and demonstrated that the workflow can map Rumex obtusifolius in datasets collected at various flight altitudes, camera settings and light conditions.

Setting up a UAV-based hyperspectral imaging system for precision agriculture

UAV-based hyperspectral systems are widely used in agriculture, in particular precision agriculture. HSRW is developing a UAV-based hyperspectral imaging system for data acquisition from the visible to the short-wave infrared (SWIR) parts of the electromagnetic spectrum.

The system mainly consists of two hyperspectral imaging sensors (Specim FX10 and Specim FX17), a high-resolution machine vision camera, and a compact GPS-Inertial Navigation System. A mechanical gimbal was designed for sensor stabilization and for mounting the system on a DJI M600 Pro.

Initial tests were performed at a test field at Haus Riswick in Kleve, Germany, and the results demonstrated the capability to produce hyperspectral data cubes, high-resolution digital surface models and RGB orthomosaics. Several field campaigns are planned to further evaluate and improve the system.

Designing an easy-to-replicate UAV-borne lidar system for surveying applications

Lidar, which stands for light detection and ranging, is a remote sensing technology that uses extremely short laser pulses and the known speed of light to accurately measure distances between the sensor and tens of thousands of points each second. By combining the position of the points relative to the sensor with the sensor’s absolute position, precise 3D models of the object or area of interest can be generated.
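The two steps described above, ranging by time of flight and combining sensor-relative points with the sensor's absolute position, can be sketched as follows. This is a minimal illustration and not HSRW's implementation: all function names are hypothetical, and the georeferencing step assumes a level sensor so that only heading (yaw) is applied, whereas a real system would also use roll and pitch from the inertial navigation system.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_time_of_flight(round_trip_s):
    """Distance to the target from the round-trip travel time of one pulse."""
    # The pulse travels to the target and back, hence the division by two.
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def point_in_sensor_frame(rng, azimuth_rad, elevation_rad):
    """Convert a range and two beam angles to x, y, z relative to the sensor."""
    horizontal = rng * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        rng * math.sin(elevation_rad),
    )


def georeference(point, sensor_position, yaw_rad):
    """Rotate a sensor-frame point by the heading and shift it by the sensor position.

    Assumption: roll and pitch are zero, so only yaw is applied.
    """
    x, y, z = point
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return (
        sensor_position[0] + c * x - s * y,
        sensor_position[1] + s * x + c * y,
        sensor_position[2] + z,
    )


if __name__ == "__main__":
    rng = range_from_time_of_flight(1e-6)  # a one-microsecond round trip
    local = point_in_sensor_frame(rng, math.radians(30.0), math.radians(-45.0))
    print(georeference(local, (100.0, 200.0, 50.0), math.radians(90.0)))
```

A one-microsecond round trip corresponds to a range of roughly 150 m, which shows why precise timing is needed to resolve distances at the centimetre level.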

A wide array of custom electronics and software solutions has been developed for HSRW’s UAV-borne lidar system. Additional effort has been put into ensuring that any interested SMEs can easily replicate the custom parts and combine them with off-the-shelf components, thus integrating a working lidar system at a substantially lower cost than the existing commercial solutions. The goal is to equip SMEs with tools that can boost their competitiveness and effectiveness in areas such as surveying and structural integrity assessment of large industrial facilities. First flights for sensor calibration and data evaluation are planned for the end of spring 2020.

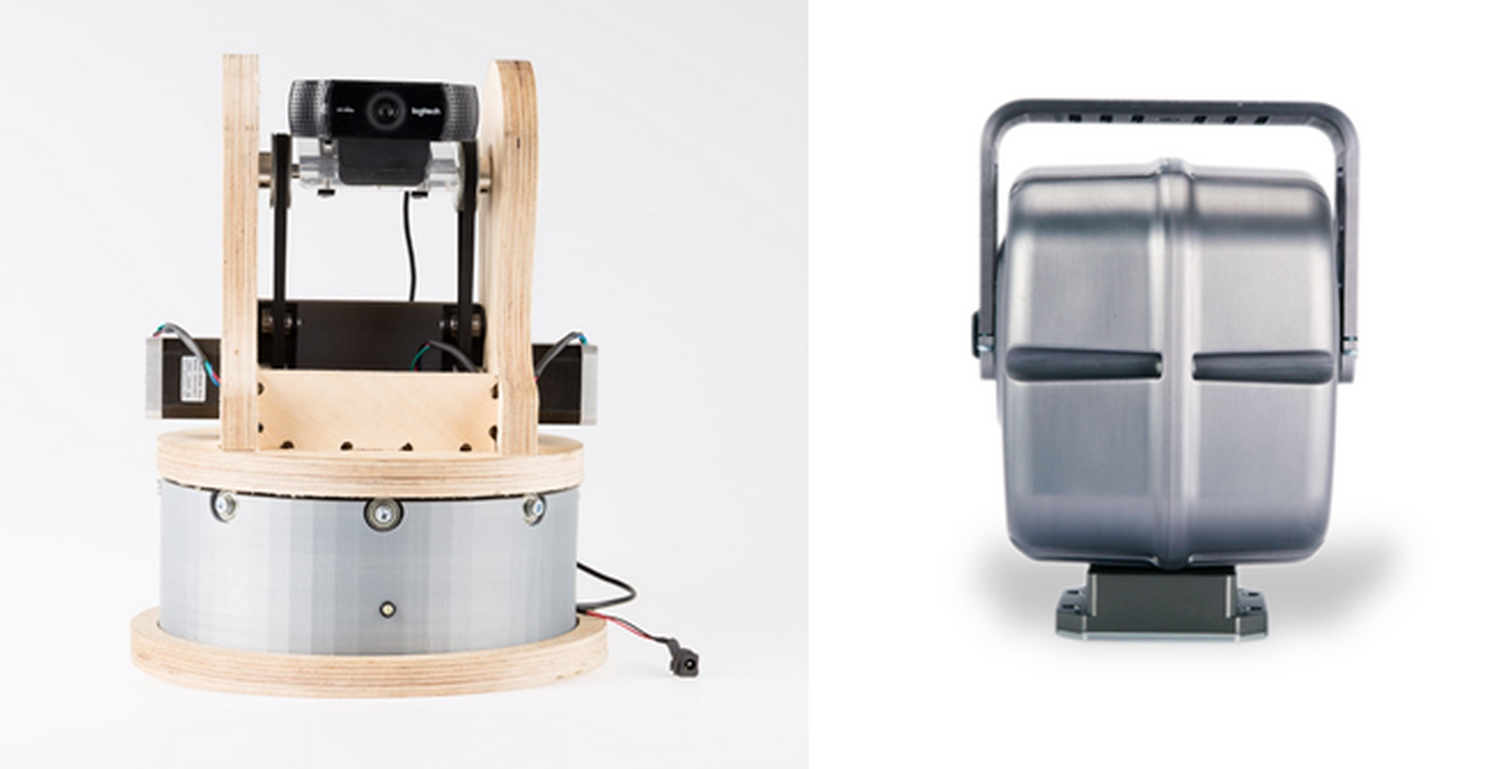

Laser tracker as an indoor positioning system

Indoor navigation of drones has always been challenging because GPS is unavailable, which makes it difficult to use UAVs in warehouses or greenhouses. To overcome this challenge, HSRW decided to investigate the potential of a laser tracker, which fuses camera and laser data into one coherent view of the world and can locate a mobile object in space. A prototype was built and the concept was successfully proven: it was able to track an object and measure the location of the target.

Follow-up research involves using an industrial pan-and-tilt unit (FLIR PTU-5) to achieve better accuracy and higher axial accelerations. Promising results were obtained by mounting a webcam on the top bracket and using color detection together with a proportional–integral–derivative (PID) controller for object tracking. The goal is to add a laser measurement tool aligned with the camera objective to calculate the position of the moving object. Software improvements are ongoing, and further tests on tracking a flying drone are planned for the end of spring 2020.
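As an illustration of how a PID controller can keep a detected object centred in the camera image, consider the minimal sketch below. It is not the project's code: the class and function names and the choice of pixel offset from the image centre as the error signal are assumptions, and no vendor API for the pan-and-tilt unit is used. The returned values would still have to be converted into commands for the actual hardware.

```python
class PID:
    """Textbook PID controller with a fixed set of gains."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first update

    def update(self, error, dt):
        """Return the control output for the current error and time step dt (seconds)."""
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def pan_tilt_command(target_px, frame_size, pan_pid, tilt_pid, dt):
    """Turn the pixel offset of the detected object into pan and tilt outputs.

    target_px:  (x, y) centre of the detected colour blob, in pixels
    frame_size: (width, height) of the camera image, in pixels
    """
    err_x = target_px[0] - frame_size[0] / 2.0
    err_y = target_px[1] - frame_size[1] / 2.0
    return pan_pid.update(err_x, dt), tilt_pid.update(err_y, dt)


if __name__ == "__main__":
    pan, tilt = PID(0.02, 0.0, 0.001), PID(0.02, 0.0, 0.001)  # placeholder gains
    # Object detected to the right of and above the centre of a 640 x 480 frame.
    print(pan_tilt_command((400, 200), (640, 480), pan, tilt, dt=1 / 30))
```

The gains shown are placeholders; in practice they would be tuned against the dynamics of the pan-and-tilt unit and the camera frame rate.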

Indoor drone navigation using stereo vision

Another approach that HSRW considered was to set up a prototype drone platform that could navigate autonomously while spatially mapping the indoor environment. The idea is to use an on-board stereo camera, a sensor commonly used to reconstruct 3D information of an area of interest, to continuously determine the relative position of the drone. During this process, the companion computer also reconstructs a virtual model of the indoor environment from the acquired images for analysis. In the end, the developed software can be deployed on any platform with a MAVLink-compliant flight controller.
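The reason a stereo camera yields 3D information can be shown with the standard relation for a rectified stereo pair, in which depth equals focal length times baseline divided by disparity. The sketch below is only an illustration under that pinhole-camera assumption; the function names and the example camera parameters are hypothetical and do not describe the camera used on the prototype.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in metres of a point seen by a rectified stereo pair.

    focal_px:     focal length in pixels
    baseline_m:   distance between the two camera centres in metres
    disparity_px: horizontal pixel shift of the point between left and right image
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the camera")
    return focal_px * baseline_m / disparity_px


def pixel_to_camera_point(u, v, disparity_px, focal_px, baseline_m, cx, cy):
    """Back-project a left-image pixel (u, v) to x, y, z in the left camera frame.

    cx, cy: principal point of the left camera in pixels
    """
    z = depth_from_disparity(focal_px, baseline_m, disparity_px)
    x = (u - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return x, y, z


if __name__ == "__main__":
    # Hypothetical camera: 700 px focal length, 12 cm baseline, 1280 x 720 image.
    print(pixel_to_camera_point(990, 360, 42, 700.0, 0.12, 640.0, 360.0))
```

Because depth is inversely proportional to disparity, distant objects produce small disparities and their depth estimates become less precise, which suits this approach to the short ranges typical of indoor spaces.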